ABSTRACT

No rest! No days off! Around the clock, every day, nonstop energetic work: welcome to the universe of Artificial Intelligence. How will humans be able to harness the wonders of AI? Humankind is in the grip of a relentless and exciting future as AI continues to develop, and people must decide whether to ride the positive momentum of AI or resist the inevitable change to the world as we know it. The real game changer will be the commercial availability of quantum computing. People must learn how to work, live, learn and connect with AI or risk becoming peasants. Big data is the lifeblood of AI, and the processing power of quantum computing will enable future computers to handle unimaginable amounts of it. Will people seize the opportunity to advance with AI, or resist it? People have the chance to evolve alongside AI through brain-computer interface (BCI) technology. Security and regulations must be put in place, which raises the questions “Who is responsible for AI security and regulation?” and “Can AI be trusted as an autonomous entity?” The ethical use of AI must also be addressed: “What about the rights and ethics of AI?” Humanity is on an inevitable path toward AI dominance, and the question is, “Will humans and AI be friends or foes?”

WHAT IS AI?

From Siri to self-driving cars, artificial intelligence (AI) is progressing rapidly. While science fiction often portrays AI as robots with human-like characteristics, AI can encompass anything from Google’s search algorithms to IBM’s Watson to autonomous weapons.

Artificial intelligence today is properly known as narrow AI (or weak AI), in that it is designed to perform a narrow task (for example, only facial recognition, only internet searches or only driving a car). However, the long-term goal of many researchers is to create general AI (AGI or strong AI). While narrow AI may outperform humans at whatever its specific task is, such as playing chess or solving equations, AGI would outperform humans at nearly every cognitive task.

WHY RESEARCH AI SAFETY?

In the near term, the goal of keeping AI’s impact on society beneficial motivates research in many areas, from economics and law to technical topics such as verification, validity, security and control. Whereas it may be little more than a minor nuisance if your laptop crashes or gets hacked, it becomes all the more important that an AI system does what you want it to do if it controls your car, your airplane, your pacemaker, your automated trading system or your power grid. Another short-term challenge is preventing a devastating arms race in lethal autonomous weapons.

In the long term, an important question is what will happen if the quest for strong AI succeeds and an AI system becomes better than humans at all cognitive tasks. As pointed out by I.J. Good in 1965, designing smarter AI systems is itself a cognitive task. Such a system could undergo recursive self-improvement, triggering an intelligence explosion that leaves human intellect far behind. By inventing revolutionary new technologies, such a superintelligence might help us eradicate war, disease, and poverty, so the creation of strong AI might be the biggest event in human history. Some experts have expressed concern, though, that it might also be the last, unless we learn to align the goals of the AI with our own before it becomes superintelligent.

There are some who question whether strong AI will ever be achieved, and others who insist that the creation of superintelligent AI is guaranteed to be beneficial. At FLI (the Future of Life Institute) we recognize both of these possibilities, but also recognize the potential for an artificial intelligence system to intentionally or unintentionally cause great harm. We believe research today will help us better prepare for and prevent such potentially negative consequences in the future, and so enjoy the benefits of AI while avoiding its pitfalls.


HOW CAN AI BE DANGEROUS?

Most researchers agree that a superintelligent AI is unlikely to exhibit human emotions like love or hate, and that there is no reason to expect AI to become intentionally benevolent or malevolent. Instead, when considering how AI might become a risk, experts consider two scenarios most likely:

- The AI is programmed to do something devastating: Autonomous weapons are artificial intelligence systems that are programmed to kill. In the hands of the wrong person, these weapons could easily cause mass casualties. Moreover, an AI arms race could inadvertently lead to an AI war that also results in mass casualties. To avoid being thwarted by the enemy, these weapons would be designed to be extremely difficult to simply “turn off,” so humans could plausibly lose control of such a situation. This risk is present even with narrow AI, but it grows as levels of AI intelligence and autonomy increase.

- The AI is programmed to do something beneficial, but it develops a destructive method for achieving its goal: This can happen whenever we fail to fully align the AI’s goals with our own, which is strikingly difficult. If you ask an obedient intelligent car to take you to the airport as fast as possible, it might get you there chased by helicopters and covered in vomit, doing not what you wanted but literally what you asked for. If an AI is tasked with an ambitious geoengineering project, it might wreak havoc on our ecosystem as a side effect, and view human attempts to stop it as a threat to be met.

As these examples illustrate, the concern about advanced AI isn’t malevolence but competence. A superintelligent AI will be extremely good at accomplishing its goals, and if those goals aren’t aligned with ours, we have a problem. You’re probably not an evil ant-hater who steps on ants out of malice, but if you’re in charge of a hydroelectric green energy project and there’s an anthill in the region to be flooded, too bad for the ants. A key goal of AI safety research is to never place humanity in the position of those ants.
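The “competence, not malice” point can be made concrete with a toy planner. The sketch below is a hypothetical illustration, not a model of any real system: the `Route` options, their numbers and the penalty weights are all invented assumptions. The same flawless optimizer picks a different plan depending only on whether its objective encodes what we literally asked for or what we actually wanted.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    name: str
    minutes: float           # travel time to the airport
    traffic_violations: int  # laws broken along the way
    discomfort: float        # 0 = smooth ride, 1 = passenger is sick


# Hypothetical candidate plans; the numbers are illustrative, not measured.
ROUTES = [
    Route("normal highway drive", 35.0, 0, 0.1),
    Route("speeding, running red lights", 18.0, 12, 0.9),
]


def literal_objective(r: Route) -> float:
    """What we *asked* for: 'as fast as possible'. Lower is better."""
    return r.minutes


def intended_objective(r: Route) -> float:
    """What we *wanted*: fast, but legal and comfortable.
    The penalty weights (10 and 60) are arbitrary assumptions for this sketch."""
    return r.minutes + 10.0 * r.traffic_violations + 60.0 * r.discomfort


def plan(objective) -> Route:
    # A perfectly competent optimizer: it always returns the true minimum.
    return min(ROUTES, key=objective)


print(plan(literal_objective).name)   # speeding, running red lights
print(plan(intended_objective).name)  # normal highway drive
```

The optimizer never makes a mistake in either case; the difference lies entirely in the objective it was handed, which is why the alignment problem concerns the goal rather than the optimizer’s skill.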

THE FUTURE IS NOW: AI’S IMPACT IS EVERYWHERE

There’s virtually no major industry that modern AI (more specifically, “narrow AI,” which performs objective functions using data-trained models and often falls into the categories of deep learning or machine learning) hasn’t already affected. That’s especially true in the past few years, as data collection and analysis have ramped up considerably thanks to robust IoT connectivity, the proliferation of connected devices and ever-faster computer processing.

Some sectors are at the start of their AI journey; others are veteran travelers. Both have a long way to go. Regardless, the impact artificial intelligence is having on our present-day lives is hard to ignore:

Transportation: Although it could take a decade or more to perfect them, autonomous vehicles will one day ferry us from place to place.

Manufacturing: AI-powered robots work alongside humans to perform a limited range of tasks like assembly and stacking, and predictive analytics sensors keep equipment running smoothly.

Healthcare: In the comparatively AI-nascent field of healthcare, diseases are diagnosed more quickly and accurately, drug discovery is sped up and streamlined, virtual nursing assistants monitor patients and big data analysis helps to create a more personalized patient experience.

Education: Textbooks are digitized with the help of AI, early-stage virtual tutors assist human instructors, and facial analysis gauges the emotions of students to help determine who’s struggling or bored and better tailor the experience to their individual needs.

Media: Journalism is harnessing AI, too, and will continue to benefit from it. Bloomberg uses Cyborg technology to help make quick sense of complex financial reports. The Associated Press employs the natural language abilities of Automated Insights to produce 3,700 earnings report stories per year, nearly four times more than in the recent past.

Customer Service: Last but hardly least, Google is working on an AI assistant that can place human-like calls to make appointments at, say, your local boutique. In addition to words, the system understands context and nuance.

But those advances (and numerous others) are only the beginning; there’s much more to come, more than anyone, even the most prescient prognosticators, can fathom.

“I think anybody making assumptions about the capabilities of intelligent software capping out at some point are mistaken,”

says David Vandegrift, CTO and co-founder of the customer relationship management firm 4Degrees.

With companies spending nearly $20 billion collectively on AI products and services annually, tech giants like Google, Apple, Microsoft and Amazon spending billions to create those products and services, universities making AI a more prominent part of their respective curricula (MIT alone is investing $1 billion in a new college devoted solely to computing, with an AI focus), and the U.S. Department of Defense upping its AI game, big things are bound to happen. Some of those developments are well on their way to being fully realized; some are merely theoretical and might remain so. All are disruptive, for better and potentially worse, and there’s no slowdown in sight.

“Lots of industries go through this pattern of winter, winter, and then an eternal spring,” former Google Brain leader and Baidu chief scientist Andrew Ng said late last year. “We may be in the eternal spring of AI.”

THE IMPACT OF AI ON SOCIETY

‘HOW ROUTINE IS YOUR JOB?’: NARROW AI’S IMPACT ON THE WORKFORCE

During a talk last fall at Northwestern University, AI expert Kai-Fu Lee championed AI technology and its forthcoming impact while also noting its side effects and limitations. Of the former, he warned:

“The bottom 90 percent, especially the bottom 50 percent of the world in terms of income or education, will be badly hurt with job displacement…The simple question to ask is, ‘How routine is a job?’ And that is how likely [it is] a job will be replaced by AI, because AI can, within the routine task, learn to optimize itself. And the more quantitative, the more objective the job is—separating things into bins, washing dishes, picking fruits and answering customer service calls—those are very much scripted tasks that are repetitive and routine in nature. In the matter of five, 10 or 15 years, they will be displaced by AI.”

In the warehouses of online giant and AI powerhouse Amazon, which buzz with more than 100,000 robots, picking and packing functions are still performed by humans, but that will change.

Lee’s sentiment was recently echoed by Infosys president Mohit Joshi, who at this year’s Davos gathering told the New York Times, “People are looking to achieve very big numbers. Earlier they had incremental, 5 to 10 percent goals in reducing their workforce. Now they’re saying, ‘Why can’t we do it with 1 percent of the people we have?’”

PREPARING FOR THE FUTURE OF AI: Toward Truly Intelligent Artificial Intelligence

The most difficult capabilities to achieve are those that require interacting with unrestricted environments that have not been prepared in advance. Designing systems with these capabilities requires integrating developments from many areas of AI. In particular, we need knowledge representation languages that codify information about many different types of objects, situations, actions, and so on, as well as about their properties and the relations among them, especially cause-and-effect relations. We also need new algorithms that can use these representations robustly and efficiently to solve problems and answer questions on almost any subject. Finally, given that they will need to acquire an almost unlimited amount of knowledge, those systems must be able to learn continuously throughout their existence. In sum, it is essential to design systems that combine perception, representation, reasoning, action, and learning. This is a very important AI problem, as we still do not know how to integrate all of these components of intelligence. We need cognitive architectures (Forbus, 2012) that integrate these components adequately. Integrated systems are a fundamental first step toward someday achieving general AI.

Lecturing at London’s Westminster Abbey in late November of 2018, internationally renowned AI expert Stuart Russell joked (or not) about his “formal agreement with journalists that I won’t talk to them unless they agree not to put a Terminator robot in the article.”

His quip revealed an obvious contempt for Hollywood depictions of far-future AI, which lean toward the overwrought and apocalyptic. What Russell referred to as “human-level AI,” also known as artificial general intelligence, has long been fodder for fantasy. But the chances of its being realized anytime soon, or at all, are pretty slim. The machines very likely won’t rise (sorry, Dr. Russell) during the lifetime of anyone reading this story.

“There are still major breakthroughs that have to happen before we reach anything that resembles human-level AI,” Russell stated. “One example is the ability to really understand the content of language so we can translate between languages using machines… When humans do machine translation, they understand the content and then express it. And right now machines are not very good at understanding the content of language. If that goal is reached, we would have systems that could then read and understand everything the human race has ever written, and this is something that a human being can’t do… Once we have that capability, you could then query all of human knowledge and it would be able to synthesize and integrate and answer questions that no human being has ever been able to answer because they haven’t read and been able to put together and join the dots between things that have remained separate throughout history.”

That is a tall order. And a mouthful. Speaking of which, emulating the human brain is exceedingly difficult and yet another reason for AGI’s still-speculative future. Longtime University of Michigan engineering and computer science professor John Laird has conducted research in the field for many years.

“The goal has always been to try to build what we call the cognitive architecture, what we think is innate to an intelligence system,” he says of work that is largely inspired by human psychology. “One of the things we know, for example, is the human brain is not really just a homogenous set of neurons. There’s a real structure in terms of different components, some of which are associated with knowledge about how to do things in the world.”

That is called procedural memory. Then there’s knowledge based on general facts, a.k.a. semantic memory, as well as knowledge about previous experiences (or personal facts), which is called episodic memory. One of the projects at Laird’s lab involves using natural language instructions to teach a robot simple games like Tic-Tac-Toe and puzzles. Those instructions typically include a description of the goal, a rundown of legal moves and failure situations. The robot internalizes those directives and uses them to plan its actions. As ever, though, breakthroughs are slow to come; slower, at any rate, than Laird and his fellow researchers would like.

“Every time we make progress,” he says, “we also get a new appreciation for how hard it is.”
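Laird’s distinction between memory types can be illustrated with a deliberately tiny agent. This is a hypothetical sketch, not Soar or any architecture from Laird’s lab: the `ToyAgent` class, its methods and the Tic-Tac-Toe encoding are assumptions, and the “instructions” are handed over as ready-made Python data rather than parsed from natural language. Semantic memory holds the taught facts (the goal and what an empty square looks like), procedural memory is the know-how for choosing a move, and episodic memory logs each decision.

```python
from dataclasses import dataclass, field

# The eight winning lines on a 3x3 board, indexed 0..8 row by row.
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),
         (0, 3, 6), (1, 4, 7), (2, 5, 8),
         (0, 4, 8), (2, 4, 6)]


@dataclass
class ToyAgent:
    # Semantic memory: general facts about the task, stored when taught.
    semantic: dict = field(default_factory=dict)
    # Episodic memory: a record of specific past decisions.
    episodic: list = field(default_factory=list)

    def teach(self, goal_lines, empty_symbol):
        """Store the 'instructions': the goal and what counts as a free square."""
        self.semantic["goal_lines"] = goal_lines
        self.semantic["empty"] = empty_symbol

    # Procedural memory: knowledge of *how* to act, encoded here as methods.
    def legal_moves(self, board):
        return [i for i, cell in enumerate(board) if cell == self.semantic["empty"]]

    def wins(self, board, player):
        return any(all(board[i] == player for i in line)
                   for line in self.semantic["goal_lines"])

    def choose_move(self, board, me, opponent):
        moves = self.legal_moves(board)
        # 1) Take a winning move if one exists.
        for m in moves:
            if self.wins(board[:m] + [me] + board[m + 1:], me):
                return self._remember(board, m, "win")
        # 2) Otherwise block a square that would let the opponent win (a failure situation).
        for m in moves:
            if self.wins(board[:m] + [opponent] + board[m + 1:], opponent):
                return self._remember(board, m, "block")
        # 3) Otherwise take the first legal move.
        return self._remember(board, moves[0], "default")

    def _remember(self, board, move, reason):
        # Log the experience in episodic memory, then return the chosen move.
        self.episodic.append({"board": list(board), "move": move, "reason": reason})
        return move


agent = ToyAgent()
agent.teach(LINES, ".")
board = ["X", "X", ".",
         "O", "O", ".",
         ".", ".", "."]
print(agent.choose_move(board, me="X", opponent="O"))  # 2
print(agent.episodic[-1]["reason"])                    # win
```

Even this toy shows why integration is hard: the agent only works because a human pre-structured the instructions, which is exactly the step Laird’s natural-language research tries to make the machine do itself.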

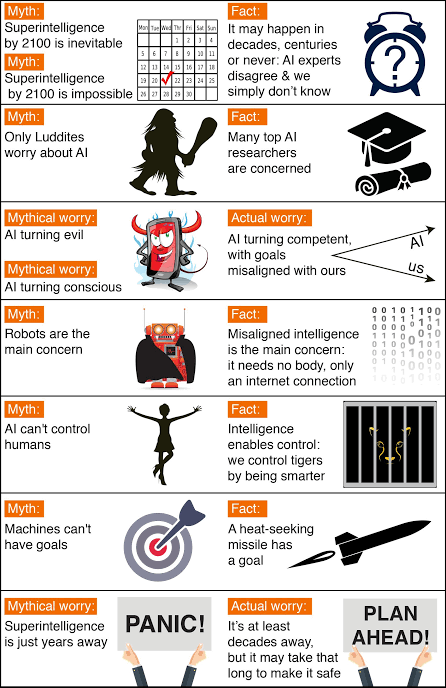

THE TOP MYTHS ABOUT ADVANCED AI

A captivating conversation is taking place about the future of artificial intelligence and what it will, or should, mean for humanity. There are fascinating controversies where the world’s leading experts disagree, such as AI’s future impact on the job market; if and when human-level AI will be developed; whether this will lead to an intelligence explosion; and whether this is something we should welcome or fear. But there are also many examples of tedious pseudo-controversies caused by people misunderstanding and talking past each other. To help ourselves focus on the interesting controversies and open questions, and not on the misunderstandings, let’s clear up some of the most common myths.

SOME FINAL THOUGHTS

No matter how intelligent future artificial intelligences become, even general ones, they will never be the same as human intelligence. As we have argued, the mental development required for all complex intelligence depends on interactions with the environment, and those interactions depend, in turn, on the body, especially the perceptual and motor systems. This, along with the fact that machines will not go through the same socialization and culture-acquisition processes as we do, further reinforces the conclusion that, no matter how sophisticated they become, these intelligences will be different from ours. The existence of intelligences unlike ours, and therefore alien to our values and human needs, calls for reflection on the possible ethical limits of developing AI. Specifically, we agree with Weizenbaum’s assertion (Weizenbaum, 1976) that no machine should ever make entirely autonomous decisions or give advice that calls for, among other things, wisdom born of human experience and the recognition of human values.

The real danger of AI is not the highly improbable technological singularity brought about by hypothetical future artificial superintelligences; the real dangers are already here. Today, the algorithms driving Internet search engines, or the recommendation and personal-assistant systems on our cellphones, already have quite adequate knowledge of what we do, our preferences and our tastes. They can even infer what we think about and how we feel. Access to the massive amounts of data that we generate voluntarily is essential for this, because analyzing such data from a variety of sources reveals relations and patterns that could not be detected without AI techniques. The result is an alarming loss of privacy. To avoid this, we should have the right to own a copy of all the personal data we generate, to control its use, and to decide who will have access to it and under what conditions, rather than leaving it in the hands of large corporations without knowing what they are really doing with our data.

The path to truly intelligent AI will continue to be long and difficult. After all, this field is barely sixty years old, and, as Carl Sagan would have observed, sixty years are barely the blink of an eye on a cosmic time scale. Gabriel García Márquez put it more poetically in a 1986 speech (“The Cataclysm of Damocles”): “Since the appearance of visible life on Earth, 380 million years had to elapse in order for a butterfly to learn how to fly, 180 million years to create a rose with no other commitment than to be beautiful, and four geological eras in order for us human beings to be able to sing better than birds, and to be able to die from love.”

REFERENCES

- Holland, J. H. 1975. Adaptation in Natural and Artificial Systems. Ann Arbor: University of Michigan Press.

- Weizenbaum, J. 1976. Computer Power and Human Reason: From Judgment to Calculation. San Francisco: W. H. Freeman and Co.

- Tegmark, M. n.d. How to get empowered, not overpowered, by AI. Retrieved from https://www.ted.com/talks/max_tegmark_how_to_get_empowered_not_overpowered_by_ai

- Professor Stuart Russell – The Long-Term Future of (Artificial) Intelligence. 2015, May 22. Retrieved from https://www.youtube.com/watch?v=GYQrNfSmQ0

- Pre-competitive Collaboration in Pharma – Future of Life … n.d. Retrieved from https://futureoflife.org/data/documents/PreCompetitiveCollaborationInPharmaIndustry.pdf?x17135

- The Myth Of AI. n.d. Retrieved from http://edge.org/conversation/the-myth-of-ai

WRITER: Samia Ehsan, Department of Anthropology, University of Dhaka